Greetings! I'm Aneesh Sreedharan, CEO of 2Hats Logic Solutions. At 2Hats Logic Solutions, we are dedicated to providing technical expertise and resolving your concerns in the world of technology. Our blog page serves as a resource where we share insights and experiences, offering valuable perspectives on your queries.

Every day, your smartphone unlocks by recognizing your face. Self-checkout systems identify products without scanning barcodes. Factory lines catch microscopic defects that human inspectors might miss. Behind these capabilities is computer vision: AI that sees, interprets, and acts on visual information.

For business leaders exploring AI applications, computer vision offers some of the most tangible and measurable returns. From reducing operational costs to enabling entirely new customer experiences, vision AI is moving from experimental to essential across industries.

This guide breaks down what computer vision is, how it works, and most importantly, how to evaluate whether it makes sense for your use case. Whether you’re a founder scoping an MVP, a product manager assessing feasibility, or an engineer exploring practical applications, you’ll find clear explanations, real-world examples, and actionable next steps.

What Is Computer Vision?

Computer vision is a field of artificial intelligence that enables machines to interpret and understand visual information from images and videos. Just as humans use their eyes and brain to recognize objects, read text, and navigate spaces, computer vision systems use cameras and algorithms to extract meaning from visual data.

Unlike basic image processing, which transforms or enhances images, computer vision interprets what’s actually in those images. It can identify a defective product on a manufacturing line, count items on a retail shelf, or detect safety hazards in real time.

Computer vision systems accept inputs such as photos, video streams, depth maps, or thermal images, and produce outputs such as class labels, bounding boxes around objects, or segmentation masks that outline specific regions.

How Computer Vision Works

Computer vision follows a structured pipeline from data capture to deployment:

Data Capture and Collection: Cameras, mobile devices, or edge sensors gather visual information. This could be retail store footage, medical scans, or drone imagery.

Annotation and Labeling: Human annotators draw bounding boxes around objects, create polygon masks for segmentation, or mark keypoints for pose estimation. Quality labeling is critical; your model learns from these examples.
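To make "labeling" concrete: a bounding box is ultimately stored as a few numbers per object. The sketch below converts a box drawn in pixel corners into the normalized `class x_center y_center width height` text line that YOLO-style training pipelines commonly read. The `Box` class and `to_yolo_line` function are hypothetical names, and your labeling tool's export format may differ; the range check is a simple example of the kind of quality gate that catches broken annotations early.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A bounding box in pixel coordinates, as drawn in a labeling tool (hypothetical type)."""
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def to_yolo_line(box: Box, img_w: int, img_h: int) -> str:
    """Convert pixel corners to a normalized 'class cx cy w h' line (values in 0-1)."""
    # Basic quality check: reject empty boxes or boxes that spill outside the image.
    if not (0 <= box.x_min < box.x_max <= img_w and 0 <= box.y_min < box.y_max <= img_h):
        raise ValueError(f"invalid box {box} for a {img_w}x{img_h} image")
    cx = (box.x_min + box.x_max) / 2 / img_w
    cy = (box.y_min + box.y_max) / 2 / img_h
    w = (box.x_max - box.x_min) / img_w
    h = (box.y_max - box.y_min) / img_h
    return f"{box.class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


print(to_yolo_line(Box(0, 100, 120, 300, 360), img_w=640, img_h=480))
```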

Model Training: Deep learning models, particularly convolutional neural networks (CNNs) and vision transformers, learn patterns from labeled data. Transfer learning lets you start from a pre-trained model and fine-tune it for your specific task, dramatically reducing the data and time required.

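Evaluation: Models are tested on unseen data using metrics such as precision (the share of the model's predictions that are correct), recall (the share of actual objects the model finds), and mean average precision (mAP), which summarizes the precision-recall trade-off across confidence thresholds and averages it over object classes. For real-time applications, latency matters as much as accuracy.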
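For object detection, a prediction usually counts as correct when its box overlaps a ground-truth box by at least some intersection-over-union (IoU) threshold; 0.5 is a common convention. The sketch below computes precision and recall at a single threshold with simple greedy matching. It is illustrative only: full mAP additionally sweeps confidence thresholds and averages over classes, and in practice you would use an established evaluator such as the COCO evaluation tools rather than this hand-rolled version.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """predictions: list of (score, box); ground_truth: list of boxes.

    Each prediction (highest score first) may claim at most one unmatched
    ground-truth box; a claimed box is a true positive.
    """
    matched, tp = set(), 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, iou_threshold
        for j, gt in enumerate(ground_truth):
            if j not in matched and iou(box, gt) >= best_iou:
                best_j, best_iou = j, iou(box, gt)
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    precision = tp / len(predictions) if predictions else 0.0   # tp / (tp + fp)
    recall = tp / len(ground_truth) if ground_truth else 0.0    # tp / (tp + fn)
    return precision, recall


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
print(precision_recall(preds, gt))  # two hits, one false alarm
```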

Deployment and Monitoring: Models run in the cloud, on edge devices, or on mobile phones. After deployment, teams monitor for model drift (accuracy degrading as real-world conditions change) and collect feedback to retrain models.

Warning: Never evaluate your model only on training data or on data that closely resembles it, such as neighboring frames from the same video. Always hold out 15-20% of your labeled data as a test set that the model never sees during training. This reveals realistic real-world performance and prevents overconfidence at deployment.
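One way to follow this advice is to split by group rather than by individual image, so that all frames from one camera, video, or capture day land on the same side of the split. The sketch below assumes you can derive such a group key from each sample (the `group_of` callback and the file-naming scheme are hypothetical). Because whole groups move together, the test share is approximate when groups differ in size.

```python
import random
from collections import defaultdict


def group_holdout_split(samples, group_of, test_fraction=0.2, seed=42):
    """Split samples so that no group (camera, video, day...) appears in both sets."""
    groups = defaultdict(list)
    for s in samples:
        groups[group_of(s)].append(s)
    keys = sorted(groups)
    random.Random(seed).shuffle(keys)        # reproducible random order of groups
    target = test_fraction * len(samples)
    train, test = [], []
    for k in keys:
        # Fill the test set group by group until it reaches the target size.
        (test if len(test) < target else train).extend(groups[k])
    return train, test


# Hypothetical naming scheme: "<video>_<frame>.jpg", 10 videos x 10 frames.
frames = [f"vid{v}_frame{f}.jpg" for v in range(10) for f in range(10)]
train, test = group_holdout_split(frames, group_of=lambda name: name.split("_")[0])
print(len(train), len(test))
```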

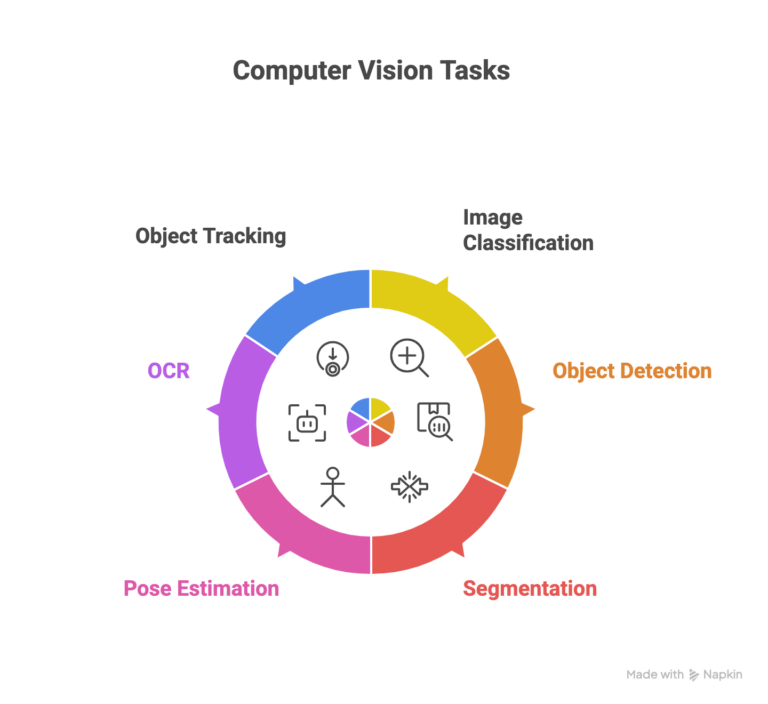

Key Computer Vision Tasks

Image Classification: Assigns a single label to an entire image. Example: identifying whether a product photo shows a shirt, shoes, or accessories.

Object Detection: Locates and labels multiple objects within an image using bounding boxes. YOLO (You Only Look Once) is a popular model for fast, real-time detection. Example: detecting all items in a shopping cart for checkout-free stores.
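Detectors typically propose many overlapping candidate boxes for the same object, and most YOLO versions clean these up with a post-processing step called non-maximum suppression (NMS). The sketch below shows the idea in plain Python: keep the highest-scoring box, then drop lower-scoring boxes of the same class that overlap it too much. The tuple layout `(score, class_id, box)` is an assumption for illustration; production code would normally call an optimized routine such as `torchvision.ops.nms`.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """detections: list of (score, class_id, box). Returns the kept detections."""
    kept = []
    for det in sorted(detections, key=lambda d: d[0], reverse=True):
        _, cls, box = det
        # Keep a box unless a higher-scoring box of the same class overlaps it heavily.
        if all(k[1] != cls or iou(k[2], box) <= iou_threshold for k in kept):
            kept.append(det)
    return kept


raw = [
    (0.9, 0, (0, 0, 10, 10)),
    (0.8, 0, (1, 1, 11, 11)),     # near-duplicate of the first box
    (0.7, 1, (1, 1, 11, 11)),     # same place, different class: kept
    (0.6, 0, (50, 50, 60, 60)),   # separate object: kept
]
print(non_max_suppression(raw))
```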

Semantic and Instance Segmentation: Semantic segmentation classifies every pixel in an image (e.g., road, sky, pedestrian). Instance segmentation goes further by distinguishing individual objects of the same class. Example: separating each person in a crowd for occupancy tracking.
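To see why instance segmentation matters, consider a naive shortcut: take a semantic mask and count connected regions of the "person" class. The sketch below (hypothetical helper `count_blobs`, pixels encoded as integer class IDs) does exactly that with a breadth-first flood fill. It works while people stand apart but undercounts as soon as two of them touch, because a semantic mask gives both the same label. Instance segmentation models such as Mask R-CNN avoid this by predicting a separate mask per object.

```python
from collections import deque


def count_blobs(mask, target):
    """Count 4-connected regions of class `target` in a semantic mask (list of rows)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    blobs = 0
    for y in range(h):
        for x in range(w):
            if mask[y][x] != target or seen[y][x]:
                continue
            blobs += 1                      # found a new, unvisited region
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:                    # flood-fill the whole region
                cy, cx = queue.popleft()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and mask[ny][nx] == target:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return blobs


PERSON = 1
apart = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 1, 1],
]
touching = [[1, 1, 1, 1]]  # imagine two people standing shoulder to shoulder
print(count_blobs(apart, PERSON), count_blobs(touching, PERSON))
```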

Keypoint and Pose Estimation: Identifies specific points on objects or bodies. Example: tracking human poses for fitness apps or ergonomic monitoring in warehouses.
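Pose models output keypoints as pixel coordinates, and most downstream logic is simple geometry on those points. As a sketch, the function below computes the angle at a joint (for example the elbow, from shoulder, elbow, and wrist keypoints) using the dot product of the two limb vectors. A fitness app could, for instance, count a repetition when the elbow angle drops below some threshold; any such threshold would be an application-specific choice, not something the model provides.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, formed by segments b->a and b->c.

    Each keypoint is an (x, y) coordinate, e.g. shoulder, elbow, wrist.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


print(joint_angle((0, 0), (1, 0), (1, 1)))  # bent arm
print(joint_angle((0, 0), (1, 0), (2, 0)))  # straight arm
```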

Optical Character Recognition (OCR): Extracts text from images. Example: digitizing invoices, reading license plates, or reading shipping labels in logistics.

Object Tracking: Follows objects across video frames. Multi-object tracking (MOT) maintains identities of multiple objects over time, useful for security surveillance or sports analytics.
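The core idea of multi-object tracking is to link each detection in a new frame to an existing identity. The sketch below is a deliberately minimal IoU tracker: each new box is greedily matched to the previous frame's track it overlaps most, and unmatched boxes get fresh IDs. The class name and the 0.3 threshold are assumptions for illustration. Established trackers such as SORT add a Kalman filter for motion prediction and optimal (Hungarian) assignment, and keep tracks alive through brief occlusions, which this sketch does not.

```python
from itertools import count


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Greedy frame-to-frame IoU matching that assigns persistent track IDs."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}              # track_id -> box from the previous frame
        self._next_id = count(1)

    def update(self, boxes):
        assigned = {}
        unmatched = dict(self.tracks)
        for box in boxes:
            best_id, best_iou = None, self.iou_threshold
            for tid, prev in unmatched.items():
                overlap = iou(box, prev)
                if overlap >= best_iou:
                    best_id, best_iou = tid, overlap
            if best_id is None:
                best_id = next(self._next_id)   # new object enters the scene
            else:
                del unmatched[best_id]          # each track matches at most once
            assigned[best_id] = box
        self.tracks = assigned                  # tracks missing this frame are dropped
        return assigned


tracker = IoUTracker()
print(tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)]))
print(tracker.update([(52, 52, 62, 62), (1, 1, 11, 11)]))  # same objects, moved
```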

Real-World Applications by Industry

E-commerce and Retail: Product tagging automates catalog creation. Checkout-free stores use object detection to charge customers automatically. Shelf monitoring tracks inventory levels and planogram compliance. Returns triage routes products based on damage detection, reducing processing time by up to 40%.

Manufacturing: Defect detection systems inspect products at speeds humans can’t match, catching surface scratches, misalignments, or color variations. Line monitoring ensures equipment safety compliance. One automotive parts manufacturer reduced defect escape rates by 65% using vision-based quality control.

Logistics and Warehousing: OCR reads text on shipping labels, and vision-based decoders read barcodes from the same camera images. Pallet counting via overhead cameras eliminates manual counts. Damage detection flags packages before they reach customers, cutting insurance claims.

Healthcare: Medical imaging triage prioritizes urgent cases like potential fractures or tumors. Anomaly detection flags irregular lab samples. Quality control systems verify medication packaging and dosage accuracy.

Real Estate and Smart Buildings: Occupancy analytics optimize HVAC and lighting. Security systems detect unauthorized access. Maintenance teams receive alerts for equipment issues like leaks or wear before they escalate.

Public Sector and Smart Cities: Traffic analytics monitor congestion and optimize signal timing. Incident detection alerts responders to accidents or hazards. Parking management guides drivers to available spots.

Pro Tip: When evaluating use cases, prioritize applications where vision can run continuously and accumulate value over time, like 24/7 defect detection, rather than one-off analyses. The compounding benefits justify higher upfront investment.

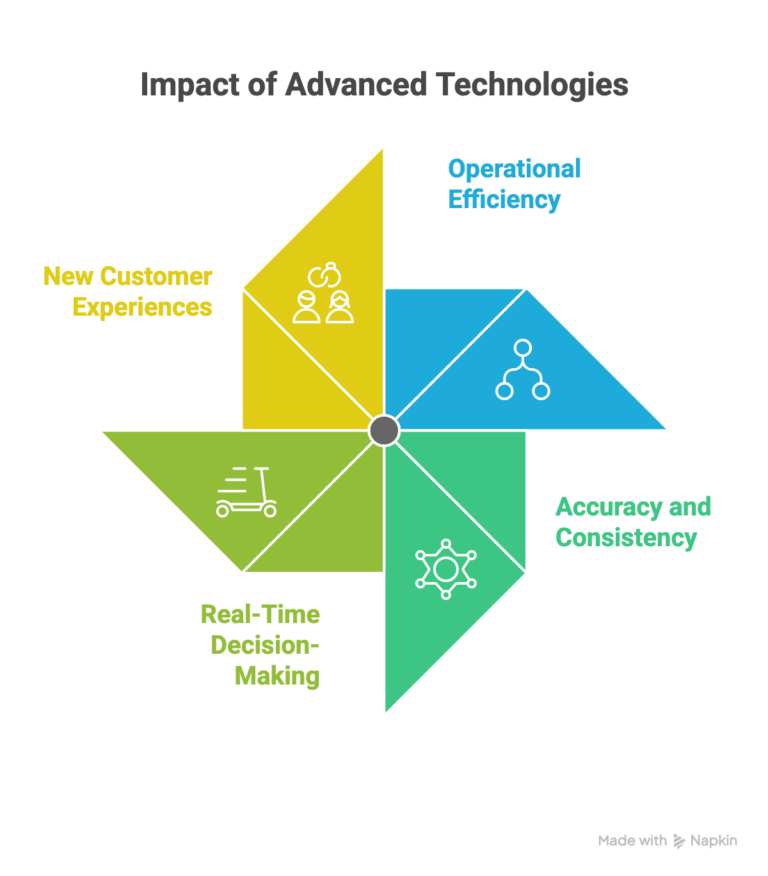

Benefits and Business Impact

Operational Efficiency: Automated inspection runs 24/7 without fatigue, processing thousands of items per hour. Labor costs shift from repetitive visual tasks to higher-value work.

Accuracy and Consistency: Well-trained models can achieve 95%+ accuracy on focused tasks and don’t have bad days. Manual visual inspection accuracy typically ranges from 70-85% due to human factors such as fatigue.

Real-Time Decision-Making: Vision systems detect problems in milliseconds, enabling immediate response for safety incidents or production line stops.

New Customer Experiences: Visual search lets shoppers find products by uploading photos. AR try-on features reduce return rates by helping customers choose correctly.

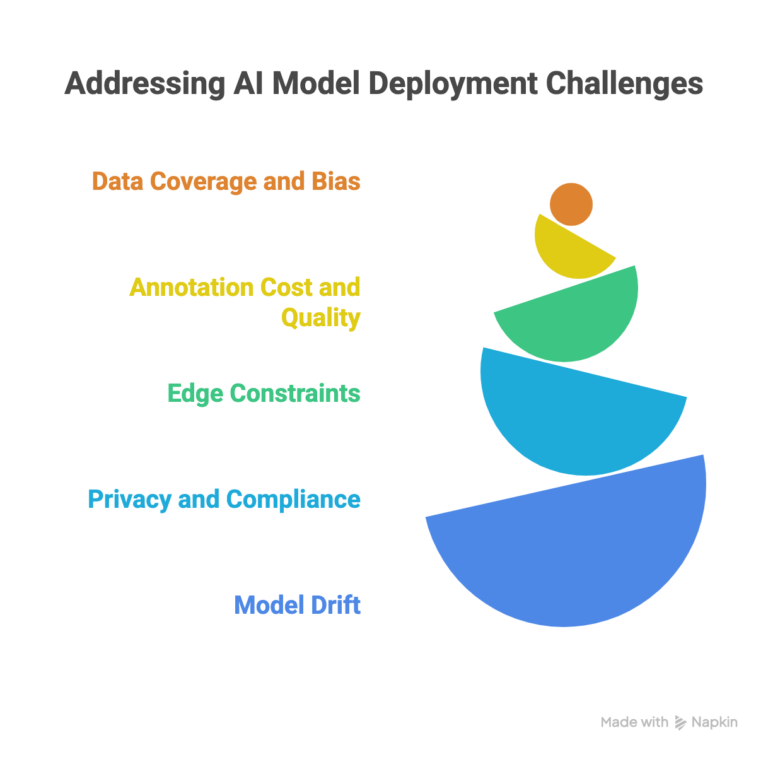

Challenges and Limitations

Data Coverage and Bias: Models struggle with scenarios absent from training data. A defect detector trained on daytime images may fail under different lighting. Edge cases and rare conditions require targeted data collection.

Annotation Cost and Quality: Labeling thousands of images is expensive and time-consuming. Inconsistent annotations degrade model performance. Budget $0.10-$5 per image depending on task complexity.
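Because the quoted per-image range spans a factor of 50, it helps to run the numbers early. The sketch below multiplies image count by that range; the `labels_per_image` parameter is a hypothetical knob for having several annotators label the same image so disagreements can be reviewed, which multiplies cost accordingly. The image counts in the loop are simply the data sizes mentioned elsewhere in this guide.

```python
def annotation_budget(num_images, cost_per_label=(0.10, 5.00), labels_per_image=1):
    """Return the (low, high) labeling cost in dollars.

    cost_per_label: the $0.10-$5 per-image range quoted above.
    labels_per_image: hypothetical multiplier for consensus labeling (1 = single pass).
    """
    low, high = cost_per_label
    labels = num_images * labels_per_image
    return round(labels * low, 2), round(labels * high, 2)


for n in (500, 1_000, 10_000):
    low, high = annotation_budget(n)
    print(f"{n:>6} images: ${low:,.2f} - ${high:,.2f}")
```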

Edge Constraints: Deploying on cameras or mobile devices means working with limited computing power, memory, and battery life. Optimizing models for edge deployment often trades accuracy for speed.
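A common edge optimization is quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts model size by roughly 4x and lets integer accelerators do the math. The sketch below shows only the arithmetic of symmetric per-tensor int8 quantization on a plain list of numbers; real toolchains such as TensorRT or TensorFlow Lite also calibrate activations and handle per-channel scales. The rounding error it introduces is the source of the accuracy-for-speed trade-off described above.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: each weight w is approximated as scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)  # small weights like 0.003 collapse to 0
```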

Privacy and Compliance: Facial recognition and people tracking raise privacy concerns. GDPR, CCPA, and local regulations require careful data handling, consent mechanisms, and retention policies.

Model Drift: Accuracy degrades over time as real-world conditions change: new product packaging, seasonal lighting shifts, or evolving defect types. Continuous monitoring and retraining are essential.
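Ground-truth labels are rarely available in production, so teams often watch proxy signals. One simple proxy, sketched below under stated assumptions, is the model's own confidence: if the rolling average over recent predictions falls well below what was measured on validation data, something may have changed. The window size and the 0.10 drop threshold are hypothetical values to tune per application, and an alert should trigger a review with freshly labeled samples, not an automatic retrain.

```python
from collections import deque


class ConfidenceDriftMonitor:
    """Flags possible drift when rolling mean confidence drops below a baseline."""

    def __init__(self, baseline_mean, window=500, max_drop=0.10):
        self.baseline_mean = baseline_mean   # mean confidence on validation data
        self.max_drop = max_drop             # hypothetical alert threshold
        self.scores = deque(maxlen=window)   # only the most recent predictions

    def observe(self, confidence):
        self.scores.append(confidence)
        return self.drifting()

    def drifting(self):
        if len(self.scores) < self.scores.maxlen:
            return False                     # wait for a full window before judging
        rolling = sum(self.scores) / len(self.scores)
        return self.baseline_mean - rolling > self.max_drop


monitor = ConfidenceDriftMonitor(baseline_mean=0.9, window=5)
for conf in [0.9, 0.9, 0.9, 0.9, 0.9, 0.6, 0.6]:
    print(conf, monitor.observe(conf))
```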

Tech Stack and Tools

Data and Labeling: CVAT and Label Studio are open-source tools for creating training datasets with bounding boxes, polygons, and keypoints.

Frameworks: OpenCV provides traditional computer vision functions. PyTorch and TensorFlow are the leading deep learning frameworks for training custom models.

Pre-trained Models: ResNet and EfficientNet excel at image classification. YOLO handles real-time object detection. Mask R-CNN performs instance segmentation. Segment Anything Model (SAM) from Meta offers impressive zero-shot segmentation.

Deployment and Optimization: ONNX standardizes model formats across frameworks. TensorRT optimizes models for NVIDIA GPUs. Edge TPUs and neural processing units accelerate inference on devices.

Cloud Services: AWS Rekognition, Google Cloud Vision, and Azure Computer Vision offer APIs for quick proof-of-concepts. Custom models provide more control for specialized use cases.

Future Trends

Vision transformers are challenging CNNs as the go-to architecture. Because they build in less inductive bias than convolutions, they typically need more pre-training data, but at scale they match or outperform CNNs. Multimodal AI combines vision with language, enabling systems that can both see and describe what they see.

Weak supervision and synthetic data generation reduce labeling costs. Active learning identifies the most valuable images to label next. Edge AI hardware is becoming more powerful and affordable, enabling sophisticated vision applications in cameras costing under $100.
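A common active-learning strategy is uncertainty sampling: run the current model over unlabeled images and send the ones it is least sure about to annotators first. The sketch below ranks images by the entropy of the model's predicted class probabilities; the input dictionary format and function names are assumptions for illustration. Other strategies, such as picking a diverse set of images, are also widely used and often combined with this one.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector; higher = less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """predictions: dict image_id -> class probabilities from the current model.

    Returns the `budget` image IDs with the most uncertain predictions.
    """
    ranked = sorted(predictions, key=lambda img: entropy(predictions[img]), reverse=True)
    return ranked[:budget]


unlabeled = {
    "a.jpg": [0.98, 0.01, 0.01],   # confident: little to learn
    "b.jpg": [0.40, 0.35, 0.25],   # very uncertain
    "c.jpg": [0.70, 0.20, 0.10],   # somewhat uncertain
}
print(select_for_labeling(unlabeled, budget=2))
```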

Conclusion

The key to successful implementation isn’t having the most sophisticated model or the largest dataset; it’s starting with a focused problem, clear success metrics, and a pragmatic approach to data collection and deployment. Whether you’re automating defect detection, enhancing customer experiences, or improving safety compliance, computer vision offers a proven path from proof-of-concept to production impact.

Start small, measure relentlessly, and scale what works. Your first computer vision project might begin with just 500 labeled images and a pre-trained model running on a single camera. But with the right approach, it can evolve into a system that runs 24/7, processes millions of images, and delivers returns that compound year after year.

FAQ

How much training data do I need?

Transfer learning reduces requirements significantly. For focused tasks, 500-1,000 labeled images often suffice for initial models. Complex scenarios may need 10,000+ examples.

Can I do this without coding?

Cloud vision APIs and no-code platforms like Teachable Machine offer limited computer vision capabilities without programming. Serious applications require code for customization and integration.

What about privacy and security?

Minimize personal data collection, anonymize or blur faces when not required, implement access controls, and comply with regional regulations. Document your data handling practices clearly.
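Blurring or pixelating faces is straightforward once a face detector has produced bounding boxes. The sketch below pixelates a box in a grayscale image stored as a list of rows, replacing each tile with its average so the original detail is discarded. It assumes the box comes from a separate face detector (not shown), and the function name and `block` size are illustrative; with OpenCV you would typically apply `cv2.GaussianBlur` or a downscale-and-upscale to the same region of interest instead.

```python
def pixelate_region(image, box, block=8):
    """Anonymize box=(x_min, y_min, x_max, y_max) in a grayscale image, in place.

    Each block x block tile inside the box is replaced by its average value.
    """
    x_min, y_min, x_max, y_max = box
    for ty in range(y_min, y_max, block):
        for tx in range(x_min, x_max, block):
            ys = range(ty, min(ty + block, y_max))   # clip tiles at the box edge
            xs = range(tx, min(tx + block, x_max))
            avg = round(sum(image[y][x] for y in ys for x in xs) / (len(ys) * len(xs)))
            for y in ys:
                for x in xs:
                    image[y][x] = avg
    return image


img = [
    [10, 20, 0, 0],
    [30, 40, 0, 0],
    [5, 5, 5, 5],
]
print(pixelate_region(img, box=(0, 0, 2, 2), block=2))
```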
