Greetings! I'm Aneesh Sreedharan, CEO of 2Hats Logic Solutions. At 2Hats Logic Solutions, we are dedicated to providing technical expertise and resolving your concerns in the world of technology. Our blog page serves as a resource where we share insights and experiences, offering valuable perspectives on your queries.

Here’s a statistic that should prompt every tech leader to pause: 95% of computer vision projects never reach production.

Not because the algorithms aren’t sophisticated enough. Not because GPUs aren’t powerful enough. But because somewhere between the demo and deployment, something breaks down, and breaks down hard.

That pristine model with 98% test accuracy? It fails to recognize basic objects when lighting shifts.

The object detection system that worked flawlessly in the lab? It struggles with real-world camera angles.

The face recognition tool trained on clean data? It can’t handle motion blur or partial occlusions.

The gap between research benchmarks and real-world reliability remains stubbornly wide. This isn’t a technology problem; it’s a knowledge gap in implementation.

This guide cuts through the hype to address what actually matters: the technical, operational, and strategic obstacles teams encounter when building production computer vision systems, and the solutions that hold up in practice.

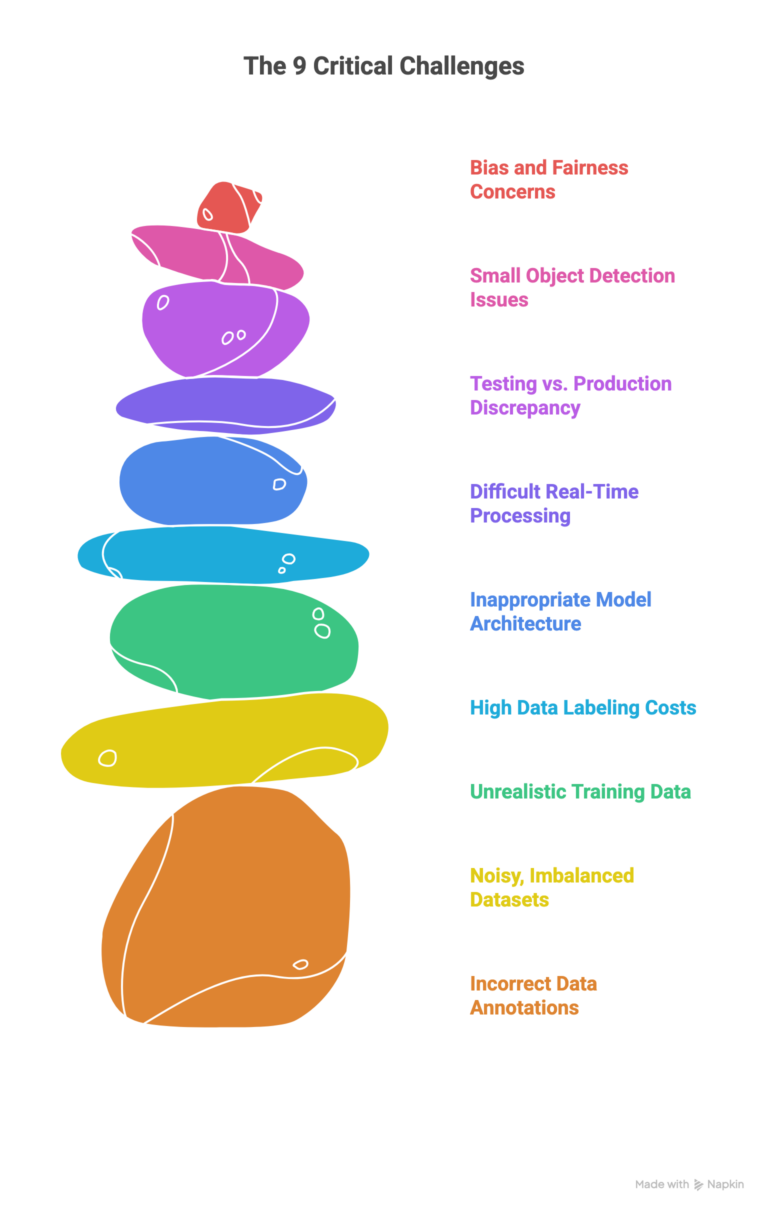

The 9 Critical Challenges (And Real Solutions)

Challenge 1: Your Data Annotations Are Probably Wrong

Let’s start with the foundation: data annotation in AI. Here’s something nobody wants to admit: your training labels are likely to contain errors.

It’s just reality. When you’re paying annotators to label thousands of images, mistakes happen. Someone gets tired, misunderstands an instruction, or can’t quite tell where an object boundary should be. Before you know it, 20-30% of your labels have issues.

One retail company we know spent six months building a shelf-monitoring system, only to discover that 30% of their product labels were wrong. They had to start over.

What Actually Works:

First, stop thinking you can do this alone. Partner with professional data annotation companies to manage projects effectively. Yes, it costs money, but it’s way cheaper than wasting months on bad data.

Second, use AI-assisted annotation tools to speed things up. These tools use pre-trained models to create initial labels, then humans correct the mistakes. This approach can cut annotation time by 70-80%.

Third, build quality checks into your workflow. Have senior annotators review junior work. For tricky cases, get multiple people to label the same image and only accept consensus labels.
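Here is a minimal sketch of consensus labeling. It assumes each image was labeled by several annotators and uses a two-thirds majority as a hypothetical acceptance bar. Images without consensus go to a senior reviewer instead of straight into training.

```python
from collections import Counter


def consensus_label(labels, min_agreement=2/3):
    """Return the majority label if enough annotators agree, else None.

    `labels` holds one class label per annotator for the same image.
    `min_agreement` is a hypothetical threshold: the fraction of annotators
    that must pick the winning label.
    """
    if not labels:
        return None
    winner, votes = Counter(labels).most_common(1)[0]
    return winner if votes / len(labels) >= min_agreement else None


def split_by_consensus(annotations, min_agreement=2/3):
    """Partition {image_id: [labels...]} into accepted labels and a list of
    image ids that need senior review."""
    accepted, needs_review = {}, []
    for image_id, labels in annotations.items():
        label = consensus_label(labels, min_agreement)
        if label is None:
            needs_review.append(image_id)
        else:
            accepted[image_id] = label
    return accepted, needs_review
```

For example, three annotators voting `["cat", "cat", "dog"]` produce an accepted "cat", while `["cat", "dog", "bird"]` sends the image to review.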

PRO TIP: Always test your annotation provider with 100 images from YOUR specific domain before committing to thousands. Check if their quality actually meets your standards.
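For bounding-box work, one way to run that pilot is to label the images yourself as a gold set. You then measure how often the provider's box overlaps yours with an intersection-over-union (IoU) of at least 0.5. The one-box-per-image format and the 0.5 threshold are simplifying assumptions for this sketch.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pilot_pass_rate(provider_boxes, gold_boxes, iou_threshold=0.5):
    """Fraction of gold images where the provider's box matches yours.

    Both arguments map image_id -> one (x1, y1, x2, y2) box. Images
    missing from the provider's output count as failures.
    """
    passed = sum(
        1 for image_id, gold in gold_boxes.items()
        if image_id in provider_boxes
        and iou(provider_boxes[image_id], gold) >= iou_threshold
    )
    return passed / len(gold_boxes)
```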

Challenge 2: Noisy, Imbalanced Datasets That Wreck Everything

Real-world data is messy. Really messy. You’ve got sensor noise, compression artifacts, motion blur, and that’s before we even talk about class imbalance.

Noise reduction in images isn’t just a nice-to-have; it’s essential. When noise levels get too high, your object detection accuracy can drop by 10-15%. For medical imaging, where subtle details matter, the impact is even worse.

Then there’s class imbalance. Maybe 99% of your images are normal products, but 1% are defects you desperately need to catch. Your model just learns to predict “normal” for everything and calls it a day with 99% accuracy. Useless.
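Here is the accuracy trap in numbers, using a hypothetical batch of 1,000 products with 10 defects:

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive):
    """Share of actual `positive` items the model caught."""
    hits = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual = sum(t == positive for t in y_true)
    return hits / actual if actual else 0.0


# Hypothetical batch: 990 normal products, 10 defects (1%).
y_true = ["normal"] * 990 + ["defect"] * 10
y_pred = ["normal"] * 1000  # a model that always says "normal"

print(accuracy(y_true, y_pred))          # 0.99
print(recall(y_true, y_pred, "defect"))  # 0.0
```

The 99% accuracy hides a defect recall of zero. This is why imbalanced problems should be judged on per-class recall and precision.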

What Actually Works:

For noise, use classic preprocessing filters such as non-local means denoising, bilateral filtering, or Gaussian blur. You don’t always need fancy deep learning models; these classic methods are often perfectly adequate and much faster.
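To show how lightweight classic filtering can be, here is a median filter in plain Python. It belongs to the same classic family as Gaussian and bilateral filtering and works especially well against salt-and-pepper noise. The list-of-rows image format is an assumption for readability. In production you would call an optimized routine such as OpenCV's `cv2.medianBlur` instead of looping in Python.

```python
def median_filter(image, radius=1):
    """Median filter for a grayscale image stored as a list of pixel rows.

    Each output pixel is the median of its (2*radius+1)^2 neighbourhood.
    Edge pixels use only the neighbours that exist; for even-sized edge
    windows the upper of the two middle values is taken.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = sorted(
                image[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            )
            out[y][x] = window[len(window) // 2]
    return out


noisy = [[10, 10, 10],
         [10, 255, 10],   # a single "salt" pixel
         [10, 10, 10]]
print(median_filter(noisy))  # [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
```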

For class imbalance, you have a few options. Use weighted loss functions that penalize mistakes on rare classes more heavily. Oversample your minority classes with aggressive data augmentation. Or try focal loss, which down-weights easy, well-classified examples so training concentrates on the hard ones your model struggles with.
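Both loss-based fixes come down to a few lines. The class-weight formula below (total samples divided by the number of classes times each class count) is the heuristic scikit-learn calls "balanced". The focal loss follows the standard binary form FL(p_t) = -α_t (1 - p_t)^γ log(p_t). The defaults γ = 2 and α = 0.25 are common starting points, not tuned values. In a real training loop you would use your framework's vectorized loss functions rather than this per-example sketch.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Per-class loss weights proportional to 1 / class frequency, scaled
    so that the average weight per training sample is 1."""
    counts = Counter(labels)
    n_classes, total = len(counts), len(labels)
    return {cls: total / (n_classes * count) for cls, count in counts.items()}


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss for a single binary prediction.

    p: predicted probability of the positive class; y: 1 or 0.
    With gamma=0 and alpha=0.5 this equals half the usual cross-entropy;
    larger gamma shrinks the loss on easy, confidently correct examples.
    """
    eps = 1e-7
    p = min(max(p, eps), 1 - eps)            # avoid log(0)
    p_t = p if y == 1 else 1 - p             # probability given to the true class
    alpha_t = alpha if y == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)
```

With 990 "normal" and 10 "defect" labels, the weights come out to about 0.51 for normal and 50.0 for defect. A mistake on a defect therefore costs roughly 100 times more.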

WARNING: Be careful with aggressive oversampling. If you just copy rare class images too many times, your model will memorize them instead of learning actual patterns. Always validate on realistic test data.

Challenge 3: The Real World Doesn’t Look Like Your Training Data

Your model works great on clean, well-lit lab images. Then you deploy it in the real world, where lighting changes throughout the day, objects get partially hidden, and camera angles vary wildly.

A retail analytics company learned this the hard way. Their people-counting system, trained on footage from bright, overhead cameras, hit 94% accuracy in testing. In actual stores with dim lighting and angled cameras? 67%. They had to start over, collecting data across 15 lighting conditions and 8 camera angles.

What Actually Works:

Data augmentation is your friend, but you need to be smart about it. Don’t just randomly flip and rotate images. Think about what your model will actually see in production.

Will lighting change? Add random brightness and contrast adjustments. Outdoor deployment? Simulate shadows, fog, and glare. Objects getting partially hidden? Use cutout augmentation that randomly blocks parts of images.
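The sketch below shows two deployment-driven augmentations on a grayscale image stored as a list of pixel rows. Random brightness and contrast cover changing light, and cutout covers partial occlusion. The ranges (±40 brightness, 0.7-1.3 contrast around mid-gray, a 2×2 cutout) are placeholders; set them from what your cameras actually see. Libraries such as torchvision and Albumentations ship tuned versions of these transforms.

```python
import random


def adjust_brightness_contrast(image, rng, max_brightness=40, contrast_range=(0.7, 1.3)):
    """Randomly shift brightness and scale contrast around mid-gray (128),
    clamping every pixel to the valid 0-255 range."""
    shift = rng.uniform(-max_brightness, max_brightness)
    scale = rng.uniform(*contrast_range)
    return [
        [min(255, max(0, round((px - 128) * scale + 128 + shift))) for px in row]
        for row in image
    ]


def cutout(image, rng, size=2, fill=0):
    """Blank out one random size x size square to mimic partial occlusion.
    Returns a new image; the input is left unchanged."""
    height, width = len(image), len(image[0])
    top = rng.randrange(0, height - size + 1)
    left = rng.randrange(0, width - size + 1)
    out = [row[:] for row in image]
    for y in range(top, top + size):
        for x in range(left, left + size):
            out[y][x] = fill
    return out


rng = random.Random(0)  # seeded so experiments are reproducible
image = [[200] * 6 for _ in range(6)]
augmented = cutout(adjust_brightness_contrast(image, rng), rng, size=2)
```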

The key is making sure your augmentations match real deployment conditions. We have seen teams over-augment with unrealistic transformations that actually hurt performance.

For significant domain shifts, look into domain adaptation techniques. These methods help your model learn from synthetic or easy-to-label data, then adapt to real-world conditions without needing tons of new labels.

Challenge 4: Labeling Data Is Eating Your Budget

AI image labeling costs anywhere from $0.05 per image for simple classification to $50+ per image for complex medical segmentation. For a medium-sized project needing 100,000 annotated images (at an average of roughly $0.20-$1.00 per image), you’re looking at $20,000-$100,000 just for labels.

Most teams don’t budget for this properly, then panic when they realize how expensive annotation is.

What Actually Works:

Semi-supervised learning is a game-changer. Train your model on a small labeled dataset, use it to generate labels for unlabeled data (keeping only confident predictions), then retrain. One manufacturing company reduced its labeling needs from 50,000 to 5,000 images this way, saving $45,000.
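The train, pseudo-label, retrain loop described above is usually called pseudo-labeling or self-training. The sketch below assumes a hypothetical `train` function that takes (item, label) pairs and returns a `predict` function giving (label, confidence). The 0.95 threshold is likewise an assumption to tune.

```python
def self_train(train, labeled, unlabeled, rounds=3, threshold=0.95):
    """Repeat: train, pseudo-label confident items, add them, retrain.

    train(list of (item, label)) -> predict(item) -> (label, confidence).
    This interface is hypothetical; adapt it to your framework.
    Returns the grown labeled set and the items still left unlabeled.
    """
    labeled, remaining = list(labeled), list(unlabeled)
    for _ in range(rounds):
        predict = train(labeled)
        still_unlabeled = []
        for item in remaining:
            label, confidence = predict(item)
            if confidence >= threshold:
                labeled.append((item, label))   # keep only confident pseudo-labels
            else:
                still_unlabeled.append(item)
        if len(still_unlabeled) == len(remaining):
            break                               # nothing new was confident enough
        remaining = still_unlabeled
    return labeled, remaining
```

Keep a small, human-labeled validation set outside this loop. Pseudo-labels can reinforce the model's own mistakes, and only independent labels will reveal that.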

Active learning is another powerful approach. Instead of randomly labeling images, have your model tell you which images would be most valuable to label. This typically reduces labeling needs by 50-70% while achieving the same accuracy.
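The most common way for a model to "tell you" which images to label is uncertainty sampling. This sketch ranks unlabeled images by the entropy of their predicted class probabilities and returns the most uncertain ones. Least-confidence and margin scores are common alternatives. The input format (image id mapped to a probability list) is an assumption.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less sure)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Uncertainty sampling: return the `budget` image ids whose predicted
    distributions have the highest entropy.

    predictions maps image_id -> list of class probabilities.
    """
    ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    return ranked[:budget]


preds = {
    "img_a": [0.98, 0.01, 0.01],  # model is confident: low labeling value
    "img_b": [0.34, 0.33, 0.33],  # model is guessing: high labeling value
    "img_c": [0.60, 0.30, 0.10],
}
print(select_for_labeling(preds, 2))  # ['img_b', 'img_c']
```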

And here’s something exciting: Foundation models like CLIP can do zero-shot classification out of the box. For some tasks, you might not need custom-labeled data at all.
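Under the hood, CLIP-style zero-shot classification embeds the image and a text prompt for each class, then picks the class whose prompt embedding is most similar to the image's. In practice the embeddings come from CLIP's image and text encoders, available for example through Hugging Face transformers' `CLIPModel`. The 3-D vectors below are made up purely to show the comparison step. Real CLIP also scales similarities by a learned temperature before a softmax.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_classify(image_embedding, prompt_embeddings):
    """Return the label whose prompt embedding best matches the image,
    plus the similarity score for every label."""
    scores = {label: cosine_similarity(image_embedding, emb)
              for label, emb in prompt_embeddings.items()}
    return max(scores, key=scores.get), scores


# Made-up vectors standing in for real CLIP embeddings.
prompts = {
    "a photo of a dented can": [1.0, 0.0, 0.0],
    "a photo of an intact can": [0.0, 1.0, 0.0],
}
label, scores = zero_shot_classify([0.9, 0.2, 0.1], prompts)
print(label)  # a photo of a dented can
```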

PRO TIP: Combine active learning with AI annotation tools. Your model selects the most informative images, AI tools create initial labels, and humans just correct errors. This combo is incredibly efficient.

Challenge 5: Choosing the Wrong Model Architecture

With hundreds of model architectures out there, how do you choose? CNNs, Vision Transformers, hybrid models: the options are overwhelming.

Here’s the simple truth: for most tasks with limited data, start with a pre-trained CNN like EfficientNet or ResNet. They work well, train quickly, and don’t need massive datasets.

Vision Transformers are amazing, but they need lots of data and compute. Unless you have 100,000+ images and powerful GPUs, stick with CNNs or hybrid models.

What Actually Works:

Transfer learning is your superpower. Never train from scratch unless you have a really good reason. Start with models pre-trained on ImageNet, freeze the early layers, and just train the final layers for your task.

This approach needs 10-100x less data and trains 5-10x faster. It’s basically free performance.

For deployment, you’ll likely need model compression. Quantization, for example converting 32-bit floating-point weights to 8-bit integers, can make your model 4x smaller and 2-4x faster, often with less than 1% accuracy loss. This is essential for edge devices and mobile apps.
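Here is the core arithmetic of int8 quantization, sketched with symmetric per-tensor scaling. Each 32-bit float weight becomes one signed byte, which is where the 4x size reduction comes from. The round-trip error per weight is at most half a quantization step. Real toolchains such as PyTorch, TensorFlow Lite, and TensorRT add calibration and often per-channel scales. The speedup also depends on hardware int8 support, which this sketch does not model.

```python
def quantize_int8_symmetric(weights):
    """Symmetric per-tensor int8 quantization: real ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # |w / scale| <= 127 by construction, so no extra clipping is needed.
    q = [round(w / scale) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8_symmetric(weights)
print(q)  # one small integer per weight; -1.27 maps to -127
restored = dequantize(q, scale)
```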

Challenge 6: Real-Time Processing Is Harder Than It Looks

Your model works fine when processing images one at a time on a powerful GPU. But real-time image processing? That’s a different beast entirely.

Autonomous vehicles need to process camera feeds at 30+ frames per second. Surveillance systems monitor dozens of cameras simultaneously. Mobile apps need to work on phones with limited computing power.

What Actually Works:

Optimize relentlessly. Use efficient architectures designed specifically for real-time use, such as MobileNet or YOLO-family detectors. Apply quantization to reduce model size. Consider edge AI deployment with specialized hardware like NVIDIA Jetson or Google Coral.

Sometimes you don’t need to process every frame. For many applications, processing every 3rd or 5th frame is perfectly adequate and cuts compute requirements dramatically.
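Frame skipping takes only a few lines of code. This sketch runs a stand-in `detect` function on every third frame and reuses the latest result in between. `every_n=3` is just an example value; the right interval depends on how fast objects move in your scenes.

```python
def process_stream(frames, detect, every_n=3):
    """Run `detect` on every n-th frame and reuse the latest result for
    the frames in between. `detect` is a stand-in for your model."""
    last_result = None
    for index, frame in enumerate(frames):
        if index % every_n == 0:
            last_result = detect(frame)
        yield last_result
```

Because it is a generator, it works on a live camera feed as well as on a recorded list of frames.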

And here’s a pro move: use a model cascade. Running multiple models sounds slow, but if you run a fast model on every frame and a slower, more accurate model only when the fast model is uncertain, you can get close to the slow model’s accuracy at close to the fast model’s speed.
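The fast-then-slow strategy is often called a model cascade. In this minimal sketch, both models are stand-in callables returning (label, confidence). A hypothetical 0.8 confidence threshold decides when to escalate.

```python
def cascade_predict(frame, fast_model, slow_model, threshold=0.8):
    """Use the fast model's answer when it is confident; otherwise defer
    to the slower, more accurate model. Also reports which model answered."""
    label, confidence = fast_model(frame)
    if confidence >= threshold:
        return label, confidence, "fast"
    label, confidence = slow_model(frame)
    return label, confidence, "slow"
```

Logging how often the "slow" branch fires tells you whether the threshold is right. If it fires on most frames, you are paying for both models and gaining little.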

Challenge 7: Your Model Works in Testing, Fails in Production

This is perhaps the most frustrating challenge. Your model crushes your test set with 95% accuracy, then completely fails when deployed. Why?

Distribution shift. Your training data doesn’t match production data. Maybe it was collected in summer, but it’s winter now. Maybe you tested on high-quality cameras, but production uses cheap ones. Maybe your annotators only labeled a specific subset of scenarios.

What Actually Works:

Build your test set carefully. It should reflect actual deployment conditions, not just be a random split of your training data. Include edge cases, different times of day, various weather conditions, whatever your model will actually see.

Consider continual learning systems that update your model as new data comes in. Monitor model performance in production and retrain when accuracy drops.
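Here is a minimal production monitor. It assumes you get ground truth for a sample of production predictions, for example from periodic human spot checks. It tracks accuracy over a rolling window and flags when that accuracy dips below a threshold. The window size and 0.90 threshold are placeholders.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent `window` labeled production
    samples and flag when it falls below `alert_below`."""

    def __init__(self, window=500, alert_below=0.90):
        self.results = deque(maxlen=window)   # oldest results drop off automatically
        self.alert_below = alert_below

    def record(self, prediction, ground_truth):
        self.results.append(prediction == ground_truth)

    @property
    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_retraining(self):
        # Only alert once the window is full, so a few early errors don't trigger it.
        return (len(self.results) == self.results.maxlen
                and self.accuracy < self.alert_below)
```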

And do a pilot deployment before going all-in. Test on a small subset of real users or locations, catch problems early, then scale gradually.

WARNING: Never assume your test set accuracy will transfer to production. Always run a pilot with real-world monitoring before full deployment.

Challenge 8: Detecting Small Objects Is Surprisingly Hard

Trying to detect faces in a crowd? Small defects on products? Distant vehicles in traffic? Small object detection is one of the toughest computer vision challenges.

When you resize images for model input, small objects lose crucial details. Downsample a frame by a factor of 5, and a 5×5 pixel face shrinks to roughly a single pixel.

What Actually Works:

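Multi-scale architectures like Feature Pyramid Networks (FPN) help by combining feature maps from several resolutions, so the fine, high-resolution detail that small objects depend on is still available at detection time. Attention mechanisms can help the model focus on small, important regions.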

But honestly? Sometimes you just need higher resolution input and more powerful hardware. There’s no magic bullet here; small objects are genuinely difficult.

One practical trick: if you know roughly where small objects appear (for example, faces typically appear in the upper portion of surveillance footage), crop those regions and process them separately at higher resolution.
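A few lines of code show both the problem and the cropping trick in numbers. An object's width scales with the ratio of model input width to source width. The sketch also handles the bookkeeping the trick needs: mapping boxes found in a full-resolution crop back to frame coordinates. The `detect` function and the region format are assumptions.

```python
def size_after_resize(object_px, source_width, input_width):
    """Approximate object width (in pixels) after resizing the image
    from source_width to input_width."""
    return object_px * input_width / source_width


def detect_in_region(frame, region, detect):
    """Run `detect` on a full-resolution crop and map boxes back to the frame.

    frame:  image as a list of pixel rows, at full resolution.
    region: (x1, y1, x2, y2) area where small objects usually appear.
    detect: stand-in detector returning (x1, y1, x2, y2) boxes relative
            to the image it receives.
    """
    x1, y1, x2, y2 = region
    crop = [row[x1:x2] for row in frame[y1:y2]]
    return [(bx1 + x1, by1 + y1, bx2 + x1, by2 + y1)
            for bx1, by1, bx2, by2 in detect(crop)]


print(size_after_resize(5, 3200, 640))  # 1.0: whole frame squeezed into the input
print(size_after_resize(5, 1280, 640))  # 2.5: a cropped region keeps more detail
```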

Challenge 9: Bias, Fairness, and the Ethics Problem

Here’s an uncomfortable truth: computer vision systems often perpetuate and amplify existing biases. Face recognition that works great on white faces but fails on darker skin tones. Hiring tools that discriminate based on appearance. Medical systems trained primarily on one demographic.

This isn’t just an ethics problem; it’s a legal and business risk. The EU AI Act, for example, requires providers of high-risk systems to examine their training data for possible biases.

What Actually Works:

Start with diverse, representative datasets. If you’re building a face recognition system, ensure your training data includes people of all races, ages, and genders.

Audit your models for bias before deployment. Test performance across different demographic groups. If you find disparities, don’t just shrug; fix them through targeted data collection or algorithmic interventions.
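A bias audit can start with something as simple as slicing your evaluation results by group. The sketch below assumes each evaluation record carries a group attribute. Which groups to use, which metric matters, and what gap is acceptable are decisions for your project. For face recognition, per-group false match rates are often more telling than accuracy.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, ground_truth) tuples.
    Returns {group: accuracy}."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, prediction, truth in records:
        total[group] += 1
        correct[group] += prediction == truth
    return {g: correct[g] / total[g] for g in total}


def max_disparity(group_accuracy):
    """Gap between the best- and worst-served groups."""
    return max(group_accuracy.values()) - min(group_accuracy.values())
```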

Use explainable AI techniques to understand what your model is actually learning. Tools like Grad-CAM show which parts of images influence predictions, helping you catch if your model is learning spurious correlations.

Conclusion

Computer vision is incredibly powerful, but it’s not magic. Success comes from understanding these fundamental challenges and applying proven solutions systematically.

Don’t try to solve everything at once. Start with data quality: get your annotations right. Then tackle your specific deployment challenges one at a time. Use transfer learning. Monitor production performance. Iterate constantly.

The teams that succeed aren’t necessarily the ones with the fanciest algorithms. They’re the ones who understand that computer vision is 20% model architecture and 80% everything else: data quality, deployment strategy, and continuous improvement.

FAQ

What are the biggest computer vision challenges in 2025?

The top challenges are data quality and annotation issues, handling noisy and imbalanced datasets, dealing with visual diversity in real-world conditions, scarcity of labeled training data, real-time processing requirements, and ensuring model fairness.

What skills do I need to become a computer vision engineer?

Essential computer vision engineer skills include deep learning fundamentals, proficiency in PyTorch or TensorFlow, experience with data preprocessing and augmentation, understanding of deployment and optimization, debugging capabilities, and the ability to work with annotation workflows and communicate with non-technical stakeholders.

How can I reduce noise in images for better model performance?

For noise reduction in images, use preprocessing techniques like non-local means denoising, bilateral filtering, or Gaussian blur. For production systems, OpenCV's built-in denoising functions work well. For severe noise, consider deep learning-based denoising models, though they require more computational resources.
